Differential privacy is a mathematical framework designed to preserve the privacy of individual records when performing computations on large datasets. It provides a formal guarantee that the results of a data analysis reveal only a limited, precisely bounded amount of information about any single individual in the dataset.

Image copyright: https://cltc.berkeley.edu/ | Reference article: https://cltc.berkeley.edu/publication/new-video-on-differential-privacy-what-so-what-now-what/

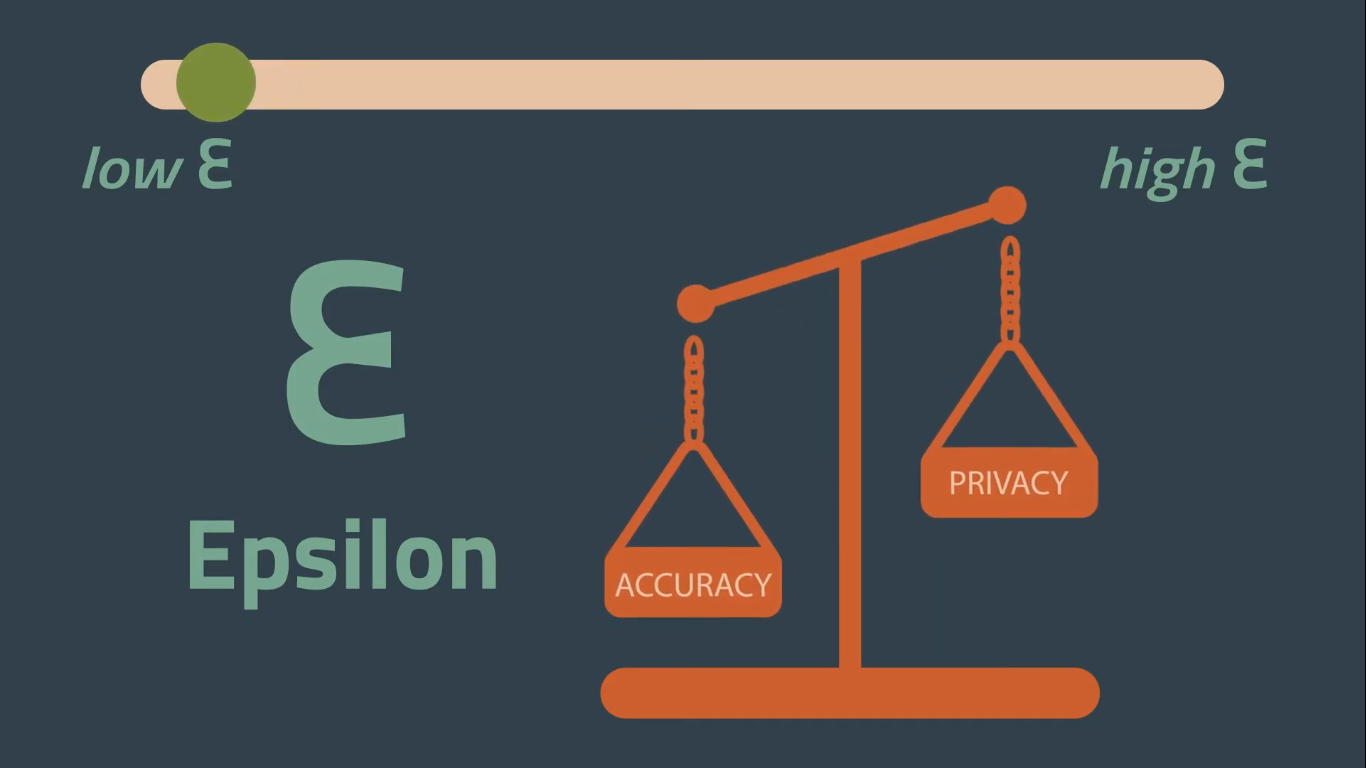

The Greek letter ε (epsilon) quantifies the privacy loss associated with releasing results computed from a dataset: it bounds how much any one individual's data can influence what is released.
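For reference, the standard textbook definition of (pure) ε-differential privacy is written below. The notation is the conventional one, not taken from the source article: M is a randomized mechanism (the analysis plus its noise), D and D′ are any two neighboring datasets that differ in one individual's record, S is any set of possible outputs, and o is a single output.

```latex
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S]
\qquad \text{for all neighboring } D, D' \text{ and all output sets } S

\mathcal{L}(o) \;=\; \ln \frac{\Pr[\mathcal{M}(D) = o]}{\Pr[\mathcal{M}(D') = o]},
\qquad |\mathcal{L}(o)| \le \varepsilon
```

The second line is the privacy loss of a particular output o (using densities instead of probabilities when outputs are continuous); ε caps it in both directions, which is the sense in which ε "defines" the privacy loss.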

Core Idea:

- In differential privacy, a small amount of carefully calibrated noise (random variation) is added to statistical outputs—like counts, sums, or averages—before releasing them.

- This noise masks whether any specific individual’s data was included or excluded, making it extremely hard for an attacker to learn private details about any single person.
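The noise-adding idea above can be sketched with the Laplace mechanism, one standard way to calibrate noise: for a query whose result changes by at most Δ (its sensitivity) when a single record is added or removed, Laplace noise with scale Δ/ε is added. This minimal sketch assumes a counting query (sensitivity 1) over hypothetical age data; the names `laplace_noise` and `private_count` are illustrative and do not come from any particular library, and real deployments typically rely on audited DP libraries rather than hand-rolled samplers like this one.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from Laplace(0, scale).

    The difference of two independent Exponential(rate=1/scale) draws
    is Laplace-distributed with location 0 and the given scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a noisy count of the records that satisfy `predicate`.

    Adding or removing one record changes a count by at most 1
    (sensitivity = 1), so the noise scale is sensitivity / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical example data: ages of ten survey respondents.
ages = [23, 35, 41, 52, 29, 67, 44, 38, 71, 19]
rng = random.Random(42)  # fixed seed only so the demo is reproducible
noisy = private_count(ages, lambda a: a >= 40, epsilon=1.0, rng=rng)
print(f"true count: {sum(a >= 40 for a in ages)}, noisy count: {noisy:.2f}")
```

The released value is the true count (5 here) plus a random offset; anyone seeing only the noisy number cannot tell whether a particular respondent was counted.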

Privacy Parameter (ε):

- A key concept in differential privacy is a parameter often denoted ε (epsilon), which controls the level of privacy protection.

- Smaller ε values mean more noise is added, offering stronger privacy but less precise results. Larger ε values provide more accurate results but weaker privacy guarantees.
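To make the trade-off concrete, the sketch below measures the average absolute error that Laplace noise adds to a counting query (sensitivity 1) at several ε values. It assumes the Laplace mechanism with scale 1/ε; for that distribution the expected absolute error equals the scale, so dividing ε by 10 multiplies the typical error by 10. The ε values and trial count are arbitrary illustrative choices.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # Difference of two i.i.d. exponentials with rate 1/scale is Laplace(0, scale).
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def mean_abs_error(epsilon: float, sensitivity: float = 1.0,
                   trials: int = 20_000, seed: int = 0) -> float:
    """Empirical average |noise| added to a query with the given sensitivity."""
    rng = random.Random(seed)
    scale = sensitivity / epsilon
    return sum(abs(laplace_noise(scale, rng)) for _ in range(trials)) / trials


for eps in (0.1, 0.5, 1.0, 5.0):
    print(f"epsilon={eps:<4} noise scale={1.0 / eps:6.2f} "
          f"mean |error|~{mean_abs_error(eps):6.2f}")
```

At ε = 0.1 a count is typically off by about 10, while at ε = 5 it is typically off by about 0.2; which end is acceptable depends on how large the true counts are and how much protection is required.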

Robust Privacy Guarantee:

- Differential privacy ensures that an analysis outcome is “almost the same” whether or not any single individual’s data is included: the probability of any particular outcome changes by at most a factor of e^ε.

- In other words, an adversary seeing the final (noise-added) output cannot reliably deduce any one person’s presence or absence in the dataset—this significantly reduces the risk of re-identification.
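The “almost the same” guarantee can be checked numerically for the Laplace counting mechanism. This sketch assumes a count with sensitivity 1, noise scale 1/ε, and a hypothetical pair of neighboring datasets whose true counts are 10 and 11. Over a grid of possible released values, it computes the largest absolute log-ratio of the two output densities, which is exactly the evidence an adversary could gain about which dataset was used.

```python
import math


def laplace_pdf(x: float, loc: float, scale: float) -> float:
    """Density of Laplace(loc, scale) at x."""
    return math.exp(-abs(x - loc) / scale) / (2 * scale)


def max_privacy_loss(count: int, epsilon: float, grid) -> float:
    """Largest |log density ratio| between outputs on neighboring datasets.

    Dataset D has `count` matching records; its neighbor D' has one more.
    Both are released with Laplace noise of scale 1 / epsilon.
    """
    scale = 1.0 / epsilon
    worst = 0.0
    for x in grid:
        p = laplace_pdf(x, count, scale)      # density of output x under D
        q = laplace_pdf(x, count + 1, scale)  # density of output x under D'
        worst = max(worst, abs(math.log(p / q)))
    return worst


epsilon = 0.5
grid = [i / 100 for i in range(-2000, 2101)]  # candidate outputs from -20.0 to 21.0
print(round(max_privacy_loss(count=10, epsilon=epsilon, grid=grid), 6))  # prints 0.5
```

The worst-case log-ratio equals ε and never exceeds it, so no released value can shift an observer's odds about the individual's presence by more than a factor of e^ε.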

Applications:

- Tech Companies: Many large tech companies employ differential privacy for collecting and analyzing user data. For instance, they may gather usage statistics or frequency counts while ensuring user-level privacy.

- Government Statistics: Some national statistical agencies (like the U.S. Census Bureau) have adopted differential privacy to protect the confidentiality of census respondents while still publishing aggregate statistics.

- Medical Research: Differential privacy can help safely share summary data (e.g., correlations, means) on patient populations without revealing sensitive patient-level details.
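As one illustration of privacy-preserving frequency counts, the classic randomized response technique can be applied on each user's device before any data is collected (the so-called local model). This is a sketch, not a description of what any particular company deploys; the 30% adoption rate, the population size, and the helper names are hypothetical. Each user reports one yes/no bit truthfully with probability e^ε/(e^ε + 1) and flips it otherwise, and the analyst inverts the known flipping rate to estimate the population frequency.

```python
import math
import random


def randomized_response(true_bit: bool, epsilon: float, rng: random.Random) -> bool:
    """Report the true bit with probability e^eps / (e^eps + 1), else flip it.

    For either possible report, the probability ratio between the two
    possible true inputs is at most e^eps, so each report is eps-DP.
    """
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1)
    return true_bit if rng.random() < p_truth else not true_bit


def estimate_fraction(reports, epsilon: float) -> float:
    """Unbiased estimate of the true fraction of True values from noisy reports."""
    p = math.exp(epsilon) / (math.exp(epsilon) + 1)
    observed = sum(reports) / len(reports)
    # observed ≈ p * pi + (1 - p) * (1 - pi); solve for the true fraction pi.
    return (observed - (1 - p)) / (2 * p - 1)


rng = random.Random(7)
# Hypothetical population: about 30% of 100,000 users have some feature enabled.
true_bits = [rng.random() < 0.3 for _ in range(100_000)]
reports = [randomized_response(b, epsilon=1.0, rng=rng) for b in true_bits]
print(f"true fraction:      {sum(true_bits) / len(true_bits):.3f}")
print(f"estimated fraction: {estimate_fraction(reports, 1.0):.3f}")
```

Any single report is deniable, yet with 100,000 users the aggregate estimate typically lands within about a percentage point of the true fraction; with fewer users or a smaller ε it becomes noticeably less precise.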

Why It Matters:

- Traditional privacy approaches (e.g., removing names or partial identifiers) are increasingly vulnerable to “re-identification” attacks, especially when adversaries have access to external datasets.

- Differential privacy provides a mathematically provable level of privacy protection, giving data analysts a clear, quantifiable measure of how “private” their released data or statistics are.
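One reason the measure is quantifiable in practice is that privacy loss composes: under the basic sequential composition theorem, running mechanisms with parameters ε1 through εk on the same data is (ε1 + ... + εk)-differentially private overall. The hypothetical `PrivacyBudget` class below sketches how an analyst might track a total budget under that rule; real systems often use tighter composition accounting, which this sketch does not attempt.

```python
class PrivacyBudget:
    """Hypothetical privacy-budget tracker based on basic sequential
    composition: mechanisms with parameters eps_1..eps_k run on the same
    data are together (eps_1 + ... + eps_k)-differentially private."""

    def __init__(self, total_epsilon: float):
        self.total_epsilon = total_epsilon
        self.spent = 0.0

    @property
    def remaining(self) -> float:
        return self.total_epsilon - self.spent

    def charge(self, epsilon: float) -> None:
        """Record one query's epsilon, refusing it if the budget would be exceeded."""
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        # Small tolerance so rounding in the running sum does not reject valid queries.
        if self.spent + epsilon > self.total_epsilon + 1e-12:
            raise RuntimeError(
                f"query needs epsilon={epsilon}, only {self.remaining:.3f} left"
            )
        self.spent += epsilon


budget = PrivacyBudget(total_epsilon=1.0)
budget.charge(0.25)  # e.g., a noisy count
budget.charge(0.5)   # e.g., a noisy average
print(f"spent={budget.spent}, remaining={budget.remaining}")  # spent=0.75, remaining=0.25
try:
    budget.charge(0.5)  # would bring the total to 1.25, above the 1.0 budget
except RuntimeError as err:
    print("refused:", err)
```

Refusing queries once the budget is exhausted is what keeps the overall guarantee at the stated ε, no matter how many questions an analyst asks.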

In essence, differential privacy is about balancing the need to glean insights from data with the need to protect individual privacy. Its rigorous guarantees have made it one of the gold standards for privacy-preserving data analysis.